t-hacking

Beware picking the timeframe to fit the narrative

You are reading Economic Forces, a free weekly newsletter on economics, especially price theory, without the politics.

One should always be skeptical of any words coming out of a politician’s mouth, but especially when they are talking about data. It is simply too easy to frame data in a way that is technically true but highly misleading, depending on the dates chosen. One recent example from Biden on Twitter:

Biden touting the “largest deficit reduction in American history” is a true statement. But the implication that Biden is a deficit hawk is predicated on a very special starting or comparison date. In this case, it was a reduction from 2020 levels, which had major Covid spending and thus a large deficit. Should we really be proud of Biden for not keeping up with 2020 levels of spending? One could just as easily say the deficit was double what it was just five years earlier. Twitter added context that the deficit was still 41% higher than pre-Covid. All three framings are true but depend on picking different comparison dates to tell the story and leave the audience with different impressions.

This newsletter isn’t about politicians but about economic research. I promise. But politicians give a good, extreme example of the problem: picking a comparison or starting date for any trend in the data is fairly arbitrary.

I first came across this idea in Thomas Sowell’s Knowledge and Decisions about the “ease of misstatement” when it comes to voters and economic statistics.

For example, as of 1960, the growth rate of the American economy could be anywhere from 2.0 percent per annum to 4.7 percent per annum, depending upon one's arbitrary choice of the base year from which to begin counting.

Sowell goes on to explain that 1960 wasn’t unique. For the two elections before it (1952 and 1956), the possible ranges of average growth rates were 1.3 to 5.3 percent and 2.1 to 5.1 percent, respectively. Given average nominal GDP growth was around 3.0 percent over the mid-20th century, you can tell a story about how the U.S. is sluggish under the current president (if you’re running against him) or that the economy is booming (if you’re the incumbent). The same data can be manipulated to tell your narrative.
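To make Sowell's point concrete, here is a minimal sketch of how the annualized growth rate to a fixed end year moves with the base year. The `output_index` numbers are made up for illustration and are not actual U.S. data; the function names are my own.

```python
from __future__ import annotations


def annualized_growth(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two levels, as a fraction."""
    if years <= 0:
        raise ValueError("years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def growth_by_base_year(series: dict[int, float], end_year: int) -> dict[int, float]:
    """Annualized growth to end_year from every earlier year in the series."""
    return {
        year: annualized_growth(value, series[end_year], end_year - year)
        for year, value in sorted(series.items())
        if year < end_year
    }


# Hypothetical output index (NOT real data): growth with two mild recessions.
output_index = {
    1950: 100.0, 1951: 108.0, 1952: 112.0, 1953: 117.0, 1954: 116.0,
    1955: 124.0, 1956: 127.0, 1957: 129.0, 1958: 128.0, 1959: 137.0,
    1960: 140.0,
}
END_YEAR = 1960

rates = growth_by_base_year(output_index, END_YEAR)
lowest_base = min(rates, key=rates.get)
highest_base = max(rates, key=rates.get)

for base, rate in rates.items():
    print(f"{base}-{END_YEAR}: {rate:.1%} per year")
print(f"Most flattering base year: {highest_base}; least flattering: {lowest_base}")
```

Even in this toy series, starting from a recession year gives a growth rate roughly twice as high as starting from the year right after the recovery.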

There is a reason you will see a lot of this within politics. The cost of voter knowledge is high, and it is especially easy to mislead. How much do voters think about deficits or economic growth?

But you will also see this in academic work, which is (hopefully) not trying to mislead readers in the same way that politicians are. Still, within academic work, we are often telling a story. In empirical work, it can be a story about trends we see in the data. Unemployment is up. Dynamism is down. Productivity is stalled.

The problem we need to be aware of is that the exact story being told can be quite sensitive to the dates picked. For work that isn’t economic history, the ending date is often more or less fixed at the latest available data, but that still leaves the proper starting date open to interpretation.

When we can pick the starting date for a comparison after seeing the data, we are effectively engaging in p-hacking, or what I’m calling t-hacking. The “t” is for time. Get it?

p-hacking

Most people are well aware of the concept of “p-hacking.” Each statistical test tells you that there is a probability, p, of obtaining test results at least as extreme as the result actually observed if the assumed null hypothesis—such as that there is no correlation between the two variables—is correct. P-hacking is when you run a bunch of these regressions and pick out the “good” ones, which are the ones with the proper p-value for what you want, such as p<0.05.

Most of the time, we aren’t worried about scientists explicitly “hacking” things and lying about results. It is much more innocent. Journals usually don’t like publishing null results. X does not correlate/cause Y is not very sexy. So researchers search for things that do correlate in some way.

There are two major problems with this common approach. First, it invalidates the whole idea of p-values. The idea of p-values is predicated on the assumption that you only run one regression or do one test. There are ways to “fix” this for multiple regressions, but that is the basic idea of p-values.

More generally, running tons of regressions until you find p<0.05 leads to lots of false positives, which are situations where you think you have found a relationship but in reality, it isn’t actually there. False positives are inevitable in any line of inquiry, but extra false positives will come up in many common approaches to data, not just statistical significance testing, as I will explain below.
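A quick back-of-the-envelope sketch shows how fast false positives pile up. It assumes the tests are independent and that every null hypothesis is true, which is a simplification. The Bonferroni threshold is one common correction for multiple tests, included here only as an illustration; the function names are my own.

```python
def chance_of_false_positive(num_tests: int, alpha: float = 0.05) -> float:
    """P(at least one p < alpha) across independent tests when every null is true."""
    if num_tests < 0:
        raise ValueError("num_tests must be non-negative")
    return 1.0 - (1.0 - alpha) ** num_tests


def bonferroni_threshold(num_tests: int, alpha: float = 0.05) -> float:
    """Per-test cutoff that keeps the overall false-positive rate at or below alpha."""
    if num_tests <= 0:
        raise ValueError("num_tests must be positive")
    return alpha / num_tests


for k in (1, 5, 20, 100):
    print(
        f"{k:>3} tests: chance of a spurious 'finding' = "
        f"{chance_of_false_positive(k):.1%}, "
        f"Bonferroni cutoff = {bonferroni_threshold(k):.4f}"
    )
```

Under these assumptions, someone who tries twenty specifications has well over a coin flip's chance of finding at least one "significant" result where nothing is there.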

Sensitive to Starting Dates

Let’s consider a major macroeconomic question that has received a lot of attention in the previous few years. Are profits in the US rising? If so, by how much?

This is a hot topic because many people have an impulse to think that profits are bad. Profits are part of the pie that could be going to workers in the form of wages. If the profit share is up, workers are getting screwed!

A famous paper by Simcha Barkai studies the rise of the profit share and the decline of labor and capital shares. According to Barkai’s main specification, the profit share rose by 13.5 percentage points. Profits were over $1.2 trillion higher than they would have been if the shares had not changed. That’s trillion with a t. That’s a big number. This amounts to around $14,600 for each of the 81 million employees in the nonfinancial corporate sector.

Notice that the paragraph above reads perfectly coherently (or it would if a better writer wrote it), but I never said what the starting date was. 13.5 percentage points since when? 1984. While 1984 was not the lowest profit share on record (1985 and 1986 were lower), 1984 is still in a trough on the long-run series, which amplifies the trend Barkai is reporting.

There’s nothing nefarious about reporting the biggest change you see in the data. But I think it’s worth keeping in mind that this number is selected from the bottom of a long data series. The story that most readers (myself included) will take from a statistic is that we could expect wages and capital returns to be $1.2 trillion higher today if we somehow fix the things that have gone wrong.

Is it realistic to return to the most extreme point in the data? For a period after college, I had lost my football weight, and I weighed 175 pounds. I will let you in on a secret. I weigh more than that today. I cut down for a weight loss competition and drastically lost a lot of weight quickly and then gained it back.

So what’s the right goal today for my weight? It’s probably an unrealistic (though admirable) goal to shoot for 175 since I’ve never been able to maintain that weight. The full time-series has relevant information.

A new short paper by Anton Bobrov and James Traina, both at the San Francisco Fed, investigates a longer time series for the Barkai profit share, though not much longer. They aren’t going back to 1950 to find the profit share. Instead, they ask how sensitive Barkai’s number is to the exact 1984 start date. Thanks to Barkai’s replication package, Bobrov and Traina are able to go back just three years and compare the trends.

They find that rolling the starting date back just three years accounts for 26% of the increase in profits over the past 30 years. Bobrov and Traina’s extension of the time series for profit share is in red in the graph below.

Even in this picture, the trend of profits is clearly upward, but the magnitude is significantly reduced. Therefore, the problem, again under the presumption that profits are a problem, is significantly reduced under this ever-so-slightly-longer timeline.
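The kind of check Bobrov and Traina run can be sketched in a few lines: hold the end year fixed, slide the start year around the headline date, and see how much the reported change moves. The `share` series below is hypothetical and is not Barkai's or Bobrov and Traina's data; it is just shaped to have a trough in the mid-1980s. The function names are my own.

```python
from __future__ import annotations


def start_date_sensitivity(
    series: dict[int, float], headline_start: int, end_year: int, max_shift: int
) -> dict[int, float]:
    """Change from each start year within max_shift years of headline_start to end_year."""
    results = {}
    for shift in range(-max_shift, max_shift + 1):
        year = headline_start + shift
        if year in series and year < end_year:
            results[year] = series[end_year] - series[year]
    return results


# Hypothetical share of income in percent (NOT actual data), with a mid-1980s trough.
share = {
    1981: 6.0, 1982: 4.5, 1983: 3.5, 1984: 2.5,
    1985: 2.0, 1986: 2.2, 1987: 3.0, 2014: 15.0,
}

sensitivity = start_date_sensitivity(share, headline_start=1984, end_year=2014, max_shift=3)
headline_change = sensitivity[1984]

for year, change in sensitivity.items():
    print(
        f"start {year}: +{change:.1f} points "
        f"({change / headline_change:.0%} of the headline change)"
    )
```

If the reported change swings a lot as the start year moves by a year or two, that is a sign the headline number leans heavily on where the comparison begins.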

Ryan Decker ran a simpler version of Barkai’s calculation going back to 1940. If we look at the longer time series, we can see that the early 1980s was an outlier in terms of profit rates and that profit rates today are actually lower than in the 1960s and 1970s, not to mention the 1940s and 1950s. Here we can really see that the early 1980s were a trough in the profit rate.

In the case of calculating shares of income, since calculating returns to capital requires using interest rates, the returns to capital fluctuate wildly with the interest rates. Since profit shares are the residual after capital and labor shares, profit rates will fluctuate too. Again from Ryan Decker, we can see interest rates and profit share on the same plot. The rise of interest rates in the 1980s is plausibly part of the story.

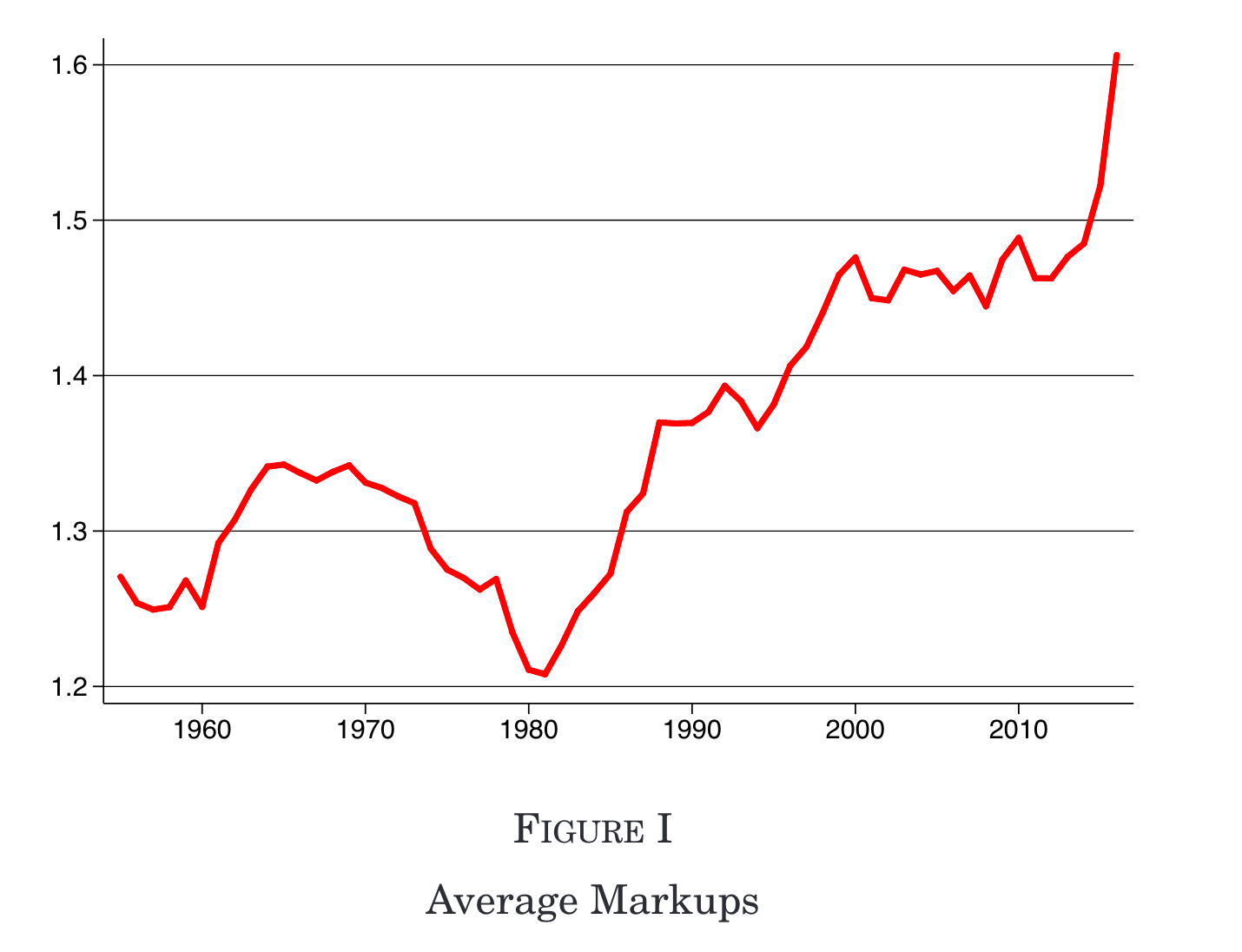

Let me give another example that I know well and have talked about before: markups and the famous 2020 QJE paper by Jan De Loecker, Jan Eeckhout, and Gabriel Unger.

The authors state in the abstract that “In 1980, aggregate markups start to rise from 21% above marginal cost to 61% now.” Why compare it to 1980? That is when markups were at their lowest in the dataset, which means this is the greatest increase one could possibly find in the data. This is similar to any article that focuses on the strongest correlation the authors find in the data.

Let me repeat. Nothing they do with the starting dates is nefarious. The authors are very transparent with the full time series. They even use the word “start” in the sentence from the abstract that I quoted above, which makes it clear that the early 1980s represented a reversal of a previous trend.

The problem is, as always, there is no real solution, only trade-offs. As I said, any date is fairly arbitrary. Some are better than others. Surely, here we want to end with the most recent data available, which the authors did. It would be more misleading to say markups were flat from 2000 to 2012. But is it obvious that 1980 is a better comparison than 1965?

In the cases mentioned, we lose a lot of information when we compare a start and end date, compared to seeing the whole time series. But saying markups increased from 1.2 to 1.6 or profits increased by $1.2 trillion per year is a lot easier to digest than a full series. Information is costly.

These papers are not unique. In fact, they show how even careful papers can run into this issue, since everyone needs a punchline number for readers to walk away with, and that number can hide a lot of subtlety. The subtlety gets further lost as the research moves from pure academia into public discussion, as these papers have. I’m simply using them since Bobrov and Traina released their paper recently, and I know the DEU paper well. And we all fall into it, although maybe “fall into” isn’t even the right phrase since it’s impossible to avoid completely.

I need to be careful about t-hacking too. I’m sure I do it all the time and need to improve. Here is one example that is sort of t-hacking and sort of p-hacking. In my newsletter on minimum wages, I argued that I’d like to see the longer-term effects of minimum wages. We would expect the real problems to arise in the long term, since people who didn’t get jobs didn’t accumulate human capital and thus saw lower wages for years afterward. If I’m being honest about how I think about economics, which I desperately try to be with this newsletter, maybe that is just me moving the goalposts. Maybe I am searching for the proper t or the proper regression to confirm my prior that minimum wages hurt low-wage workers who lose their jobs.

Instead of pointing fingers or offering a solution, I just want us to think more about the problem of t-hacking. Once we recognize it, we will see it all over the place, especially in public debates. Violence is up, but only relative to recent lows. Religiosity is down, but only relative to historic highs. Recognizing the longer-term trends does not mean that policy couldn’t push trends in a better direction. But it does humble us a bit. Can we really expect historic lows or highs to persist forever into the future? For GDP, probably. For profit shares, probably not.

Good point on the double S of “Selective Start dates” distorting the findings.

In U.K., I happen to have tracked income inequality over the past 2-3 centuries.

Absolutely not deflating into fictitious £s. Retaining the actual money pay of the day,

And using Pareto analysis to get the upper shape. And good U.K. wage statistics since 1968.

Conclusion for C20th is that the U.K. experienced highly atypical income compression 1948-78.

Fully restored pay differentials took equally 30 years to 2000-10.

Thatcher elected by desperate working class in 1979, no coincidence nadir maximum compression.

For “beware the timeframe to fit the narrative” point, most U.K. income inequality studies start in the 1970s.

Ignorance of prior history makes the authors think their starting point is normal. In fact atypical.

The 1970s in U.K. so atypical that 2mn of best Emigrated. (See GDR 1950s, Venezuela, Zimbabwe).

Birth rate declined sharply as no future seen. Study all printed words showed gloom worse than WW2

Decadal productivity growth collapsed by one third from 1950s and 1960s.

Key point is that all these indicators reversed during 1980s & 1990s. With restoration of pay inequality

The 30 year V-shape down to 1979, fully reversed with reversal to usual pay differentials.

Lesson for “Selective Start dates” is check widely around, both economic & social data, if it is typical.

A fair starting date will be in typical period, or made typical by using several year trend averages.
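The averaging idea in the comment above can be sketched as follows: instead of comparing the end point to a single start year, compare it to the mean of a window of years around that start. The series and the window width are hypothetical choices for illustration, not taken from any of the studies discussed.

```python
from __future__ import annotations


def averaged_baseline(series: dict[int, float], center_year: int, half_window: int) -> float:
    """Mean of the series over center_year +/- half_window, using the years available."""
    window = [
        series[year]
        for year in range(center_year - half_window, center_year + half_window + 1)
        if year in series
    ]
    if not window:
        raise ValueError("no observations inside the window")
    return sum(window) / len(window)


# Hypothetical indicator (NOT real data) with a one-year dip in 1979.
indicator = {
    1976: 10.0, 1977: 10.5, 1978: 9.5, 1979: 6.0,
    1980: 9.0, 1981: 10.0, 1982: 10.0, 2010: 12.0,
}

single_year_change = indicator[2010] - indicator[1979]
smoothed_change = indicator[2010] - averaged_baseline(indicator, 1979, half_window=2)

print(f"Change since 1979 alone:            +{single_year_change:.1f}")
print(f"Change since the 1977-1981 average: +{smoothed_change:.1f}")
```

Averaging does not remove the arbitrariness of the choice, but it keeps a single atypical year from driving the whole comparison.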

Great post! I like the football weight analogy especially. A base year always needs to be chosen, but the key is that the choice is defended. There are a bunch of ways to do this. The first, as the authors do in the QJE article, is to state why a year is picked as a base year. Another is a sensitivity analysis, where the starting year is moved forward/backward ~5 years to show the results are similar. A third is to pick a year that provides an intuitive cutoff, for example 1945 or 1989. While politicians can get away with just picking the base year that makes them look best, peer review will generally require a robust defense.